Tutorial - Shape Reconstruction via Scatterometry

This tutorial shows how PyThia can be used to approximate the scatterometry measurement process and conduct a global sensitivity analysis of the model.

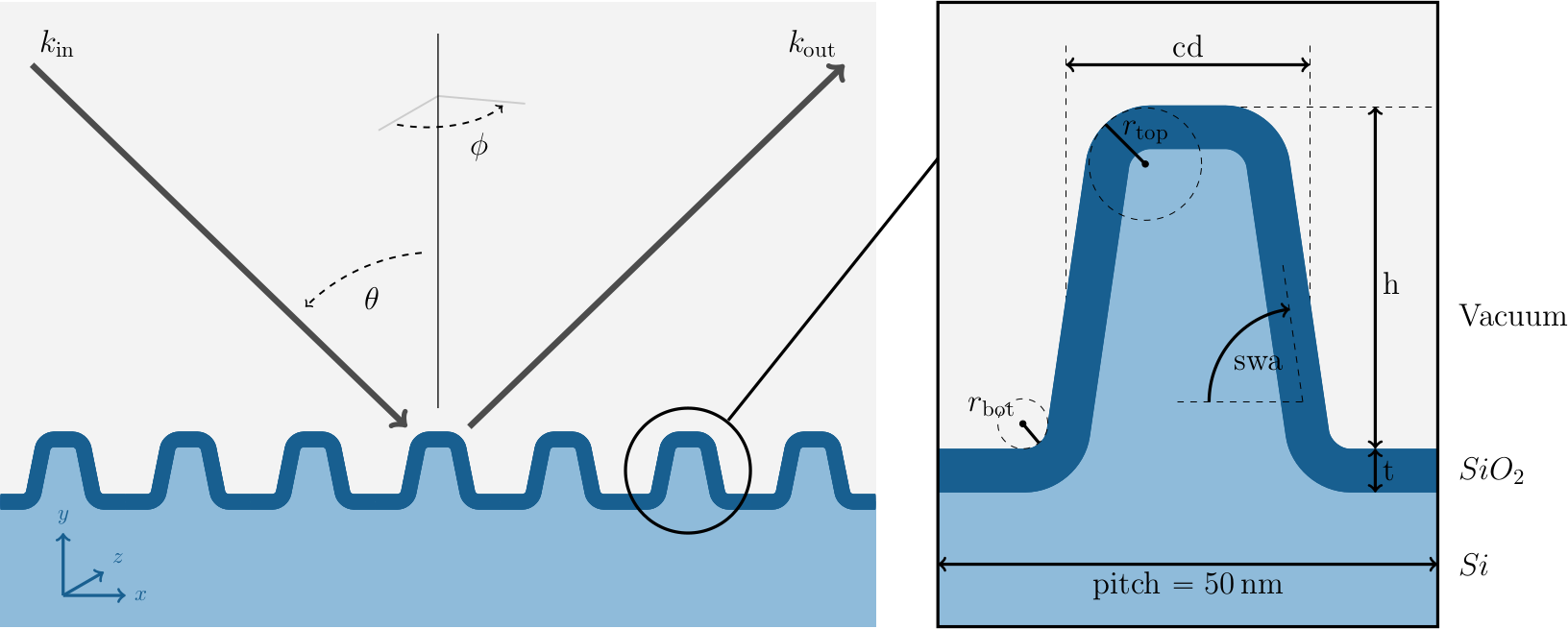

In this experiment a silicon line grating is irradiated by an electromagnetic wave under different angles of incidence \(\theta\) and azimuthal orientations \(\phi\). The aim of this experiment is to build a surrogate for the map from the six shape parameters \(x = (\mathit{h}, \mathit{cd}, \mathit{swa}, \mathit{t}, \mathit{r}_{\mathrm{top}}, \mathit{r}_{\mathrm{bot}})\) to the detected scattered far field of the electromagnetic wave. The experimental setup is visualized in the image below.

Note

If you want to run the source code for this tutorial yourself, you need to download the tutorial data. This tutorial assumes that the data are located in the same directory as the source code.

Surrogate

We start by loading the numpy and pythia packages.

import numpy as np

import pythia as pt

Generating training data from the forward problem requires solving Maxwell’s equations with finite elements, which is very intricate and time consuming. We assume the training data we rely on in this tutorial have been previously generated in an “offline” step, on a different machine using the JCMsuite software. Hence we can simply load the data.

x_data = np.load("x_train.npy") # (10_000, 6)

y_data = np.load("y_train.npy") # (90, 10_000, 2)

w_data = np.load("w_train.npy") # (10_000, )

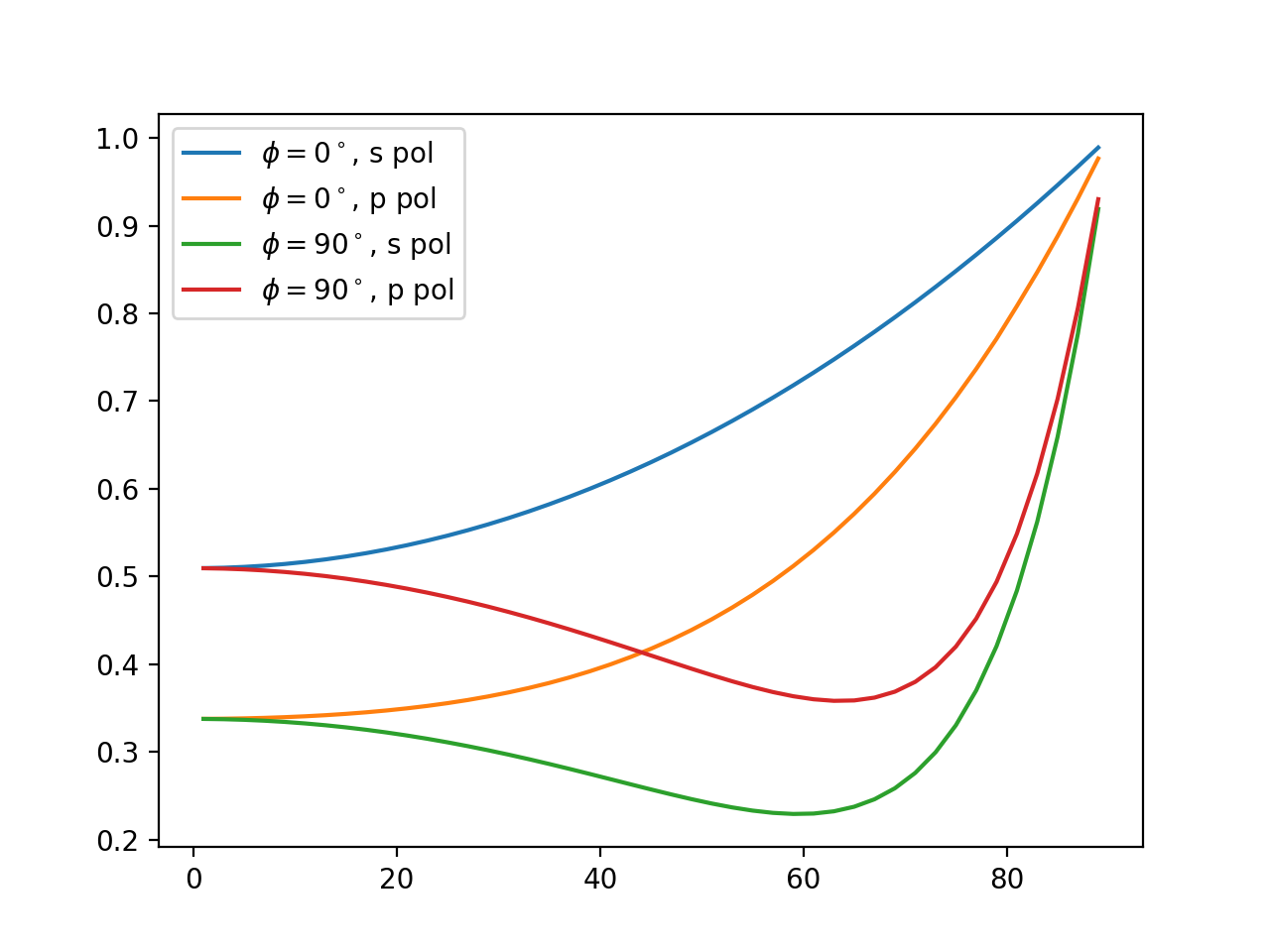

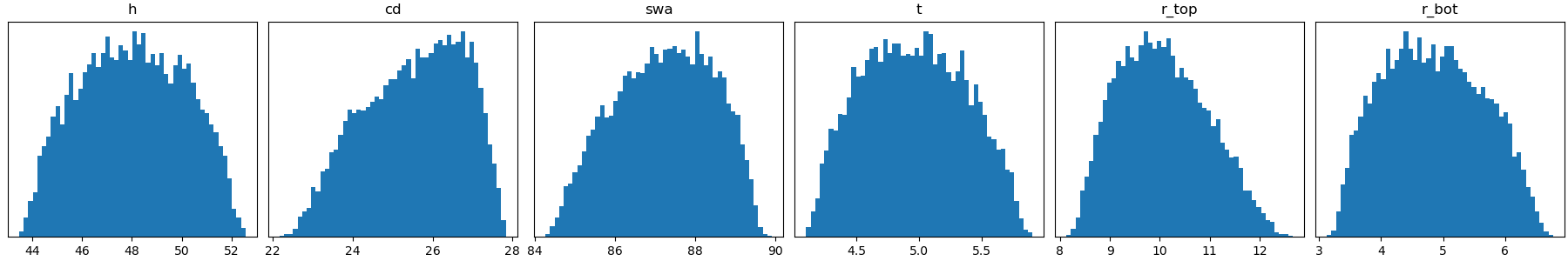

For the simulations we assumed Beta distributed parameters in reasonable parameter domains, which is also the density we drew the samples from. In particular, this implies that the weights are simply \(w_i = 1 / N\), where \(N\) is the number of samples (i.e., \(10.000\) in this case). The scattered intensity signal data consist of 4 curves (2 different azimuthal angles \(\phi\) with s- and p- polarized wave signals each) where each curve is evaluated in 45 points (angles of incidence \(\theta\)). The continuous curves look similar to the image below.

As a first step to compute a polynomial chaos expansion, we need to define the parameters using PyThias Parameter class.

We can use the parameter.npy file to access the parameter information.

params = []

for dct in np.load("parameter.npy", allow_pickle=True):

params.append(

pt.parameter.Parameter(

name=dct["_name"],

domain=dct["_dom"],

distribution=dct["_dist"],

alpha=dct["_alpha"],

beta=dct["_beta"],

)

)

Below you can find a table and an image listing the relevant parameter information.

# |

Name |

distribution |

\(\alpha\) |

\(\beta\) |

|---|---|---|---|---|

1 |

\(\mathit{h}\) |

\(\mathrm{Beta}_{\alpha,\beta}([43, 53])\) |

\(10.0\) |

\(9.67\) |

2 |

\(\mathit{cd}\) |

\(\mathrm{Beta}_{\alpha,\beta}([22, 28])\) |

\(10.0\) |

\(7.76\) |

3 |

\(\mathit{swa}\) |

\(\mathrm{Beta}_{\alpha,\beta}([84, 90])\) |

\(10.0\) |

\(10.13\) |

4 |

\(\mathit{t}\) |

\(\mathrm{Beta}_{\alpha,\beta}([4, 6])\) |

\(10.0\) |

\(11.29\) |

5 |

\(\mathit{r}_{\mathrm{top}}\) |

\(\mathrm{Beta}_{\alpha,\beta}([8, 13])\) |

\(10.0\) |

\(11.10\) |

6 |

\(\mathit{r}_{\mathrm{bot}}\) |

\(\mathrm{Beta}_{\alpha,\beta}([3, 7])\) |

\(10.0\) |

\(12.35\) |

Next, we split the available data into a training and a test set, using roughly \(8.000\) data points for the training.

# split training/test dataset

split = int(0.8 * x_data.shape[0])

# training data

x_train = x_data[:split]

y_train = y_data[:split]

w_train = w_data[:split]

# test data

x_test = x_data[split:]

y_test = y_data[split:]

Now that we have our parameters defined and the training data in place, we only need to define which expansion terms we want to include into our expansion. In this case, we choose a simplex index set to bound the total polynomial degree of the expansion by four. This leaves us with \(210\) polynomial chaos terms in our expansion.

indices = pt.index.simplex_set(dimension=len(params), maximum=4)

index_set = pt.index.IndexSet(indices)

Finally, we have all the ingredients to compute our polynomial chaos surrogate, which requires only one line of code.

surrogate = pt.chaos.PolynomialChaos(params, index_set, x_train, w_train, y_train)

Evaluating the surrogate on our test set with

y_approx = surrogate.eval(x_test)

allows us compute an empirical approximation of the \(L^2\)-error (MSE), which is approximately at \(5*10^{-3}\). This is ok, since the measurement error of the observation we will investigate for the inverse problem will be at least one order of magnitude larger.

Sensitivity analysis

There are essentially two different types of sensitivity analyses, local and global.

Local sensitivity analysis typicalls considers a change in the signal by a small variation in the input around a specific point.

Given the polynomial chaos surrogate, computation of the local sensitivities is of course very easy as derivatives of the polynomial are given analytically.

To compute the local sensitivities of the second parameter (\(\mathit{cd}\)) in all points of our test set, i.e. \(\partial/\partial x_{\mathit{cd}}\ f(x_{\mathrm{test}})\), we can use the optional partial argument of the eval() function

local_sensitivity = surrogate.eval(x_test, partial=[0, 1, 0, 0, 0, 0])

However, computing local sensitivities yields only a very limited image of the signal dependency on change in the individual parameter or combinations thereof.

When it comes to estimating whether the reconstruction of the hidden parameters will be possible it is often more reliable to consider the overall dependence of the signal on the parameters.

There are various types of global sensitivity analysis methods, but essentially all of them rely on integrating the target function over the high-dimensional parameter space, which is typically not feasible.

The polynomial chaos expansion, however, is closely connected to the so called Sobol indices, which allows us to compute these indices by squaring and summing different subsets of our expansion coefficients.

The Sobol coefficients are automatically computed with the polynomial chaos approximation and can be accessed via the surrogate.sobol attribute, which is ordered according to the list of Sobol tuples in index_set.sobol_tuples.

As the signal data consist of multiple points on different curves, we get the Sobol indices for each point of our signal.

As this is hard to visualize however, we can have a look on the maximal value each Sobol index takes over the signal points.

The 10 most significant Sobol indices are listed in the table below.

# |

Sobol tuple |

Parameter combinations |

Sobol index value (max) |

|---|---|---|---|

1 |

\((2,)\) |

\((\mathit{cd},)\) |

\(0.9853\) |

2 |

\((4,)\) |

\((\mathit{t},)\) |

\(0.8082\) |

3 |

\((1,)\) |

\((\mathit{h},)\) |

\(0.3906\) |

4 |

\((3,)\) |

\((\mathit{swa},)\) |

\(0.2462\) |

5 |

\((2, 5)\) |

\((\mathit{cd}, \mathit{r}_{\mathrm{top}})\) |

\(0.0332\) |

6 |

\((5,)\) |

\((\mathit{r}_{\mathrm{top}},)\) |

\(0.0240\) |

7 |

\((3, 5)\) |

\((\mathit{swa}, \mathit{r}_{\mathrm{top}})\) |

\(0.0137\) |

8 |

\((1, 2)\) |

\((\mathit{h}, \mathit{cd})\) |

\(0.0065\) |

9 |

\((6,)\) |

\((\mathit{r}_{\mathrm{bot}},)\) |

\(0.0059\) |

10 |

\((2, 3, 5)\) |

\((\mathit{cd}, \mathit{swa}, \mathit{r}_{\mathrm{top}})\) |

\(0.0030\) |

We see that all single parameter Sobol indices are among the top ten, implying that changes in single parameters are among the most influential. Moreover, looking at the actual (maximal) values, we can assume that the first 4 parameters (\(\mathit{h}\), \(\mathit{cd}\), \(\mathit{swa}\), \(\mathit{t}\)) can be reconstructed very well, whereas the sensitivities of the corner roundings \(\mathit{r}_{\mathrm{top}}\) and \(\mathit{r}_{\mathrm{bot}}\) imply that we will get large uncertainties in the reconstruction.

This concludes the tutorial.

Complete Script

The complete source code listed below is located in the tutorials/ subdirectory of the repository root.

1"""

2File: tutorials/tutorial_scat.py

3Author: Nando Hegemann

4Gitlab: https://gitlab.com/Nando-Hegemann

5Description: Tutorial - Shape Reconstruction via Scatterometry.

6SPDX-License-Identifier: LGPL-3.0-or-later OR Hippocratic-3.0-ECO-MEDIA-MIL

7"""

8import numpy as np

9import pythia as pt

10

11angles = np.load("angles.npy") # (90, 2)

12

13# reshape

14angles = np.concatenate([angles, angles], axis=0) # (180, 2)

15angle_idxs = [range(45), range(45, 90), range(90, 135), range(135, 180)]

16angle_labels = [

17 "$\\phi=0^\\circ$, s pol",

18 "$\\phi=0^\\circ$, p pol",

19 "$\\phi=90^\\circ$, s pol",

20 "$\\phi=90^\\circ$, p pol",

21]

22print("angle info:")

23print(f" azimuth phi: {np.unique(angles[:,0])}")

24print(f" incidence theta: {np.unique(angles[:,1])}")

25

26# load data

27x_data = np.load("x_train.npy") # (10_000, 6)

28y_data = np.load("y_train.npy") # (90, 10_000, 2)

29w_data = np.load("w_train.npy") # (10_000, )

30

31# reshape y_data

32y_data = np.concatenate(

33 [y_data[:45, :, 0], y_data[:45, :, 1], y_data[45:, :, 0], y_data[45:, :, 1]], axis=0

34).T # (10_000, 180)

35print("data info:")

36print(f" x_data.shape: {x_data.shape}")

37print(f" w_data.shape: {w_data.shape}")

38print(f" y_data.shape: {y_data.shape}")

39

40# load parameters

41params = []

42for dct in np.load("parameter.npy", allow_pickle=True):

43 params.append(

44 pt.parameter.Parameter(

45 name=dct["_name"],

46 domain=dct["_dom"],

47 distribution=dct["_dist"],

48 alpha=dct["_alpha"],

49 beta=dct["_beta"],

50 )

51 )

52

53print("parameter info:")

54print(" parameter dist domain alpha beta")

55print(" " + "-" * 42)

56for param in params:

57 print(

58 f" {param.name:<8} ",

59 f"{param.distribution:<5} ",

60 f"{str(np.array(param.domain, dtype=int)):<7} ",

61 f"{param.alpha:<4.2f} ",

62 f"{param.beta:<4.2f}",

63 )

64

65# split training/test dataset

66split = int(0.8 * x_data.shape[0])

67

68# training data

69x_train = x_data[:split]

70y_train = y_data[:split]

71w_train = w_data[:split]

72

73# test data

74x_test = x_data[split:]

75y_test = y_data[split:]

76

77# define multiindex set

78indices = pt.index.simplex_set(dimension=len(params), maximum=4)

79index_set = pt.index.IndexSet(indices)

80

81print("Multiindex information:")

82print(f" number of indices: {index_set.shape[0]}")

83print(f" dimension: {index_set.shape[1]}")

84print(f" maximum dimension: {index_set.max}")

85print(f" number of sobol indices: {len(index_set.sobol_tuples)}")

86

87print("compute PC surrogate")

88# run pc approximation

89surrogate = pt.chaos.PolynomialChaos(params, index_set, x_train, w_train, y_train)

90# test approximation error

91y_approx = surrogate.eval(x_test)

92

93# L2-L2 error

94err_abs = np.sum(np.linalg.norm(y_test - y_approx, axis=1)) / y_test.shape[0]

95err_rel = np.sum(np.linalg.norm((y_test - y_approx) / y_test, axis=1)) / y_test.shape[0]

96print(f" L2-L2 error: {err_abs:4.2e} (abs), {err_rel:4.2e} (rel)")

97

98# C0-L2 error

99err_abs = np.sum(np.max(np.abs(y_test - y_approx), axis=1)) / y_test.shape[0]

100err_rel = (

101 np.sum(np.max(np.abs(y_test - y_approx) / np.abs(y_test), axis=1)) / y_test.shape[0]

102)

103print(f" L2-C0 error: {err_abs:4.2e} (abs), {err_rel:4.2e} (rel)")

104

105# global sensitivity analysis

106print("maximal Sobol indices:")

107max_vals = np.max(surrogate.sobol, axis=1)

108l2_vals = np.linalg.norm(surrogate.sobol, axis=1)

109sobol_max = [

110 list(reversed([x for _, x in sorted(zip(max_vals, index_set.sobol_tuples))]))

111]

112sobol_L2 = [

113 list(reversed([x for _, x in sorted(zip(l2_vals, index_set.sobol_tuples))]))

114]

115print(f" {'#':>2} {'max':<10} {'L2':<10}")

116print(" " + "-" * 25)

117for j in range(10):

118 print(f" {j+1:>2} {str(sobol_max[0][j]):<10} {str(sobol_L2[0][j]):<10}")